I try to keep an eye on the world of emerging AI technologies. It’s hard to keep up if you’re not completely involved in the sector, but impressive examples pop up more regularly than ever, and some of them are incredibly easy for anyone to use. One section of the AI world that is garnering lots of attention is the wave of ‘art’ applications that can create unique imagery based upon plain text (or ‘natural language’) descriptions.

Deep Dream

Google’s Deep Dream was the first that I tried, back in 2015. Feed it an image and it would re-synthesise it in lots of frankly disturbing ways. You could choose from some set styles, but they all ended up looking a bit odd. Still, it was fun to play with, and the underlying technologies, embryonic at that stage, had loads of potential.

Wombo Dream

Fast forward a bit. While we’re winding forwards, transistor sizes get smaller, CPUs and GPUs accelerate, and AI gets better and faster. In 2021 I came across a new AI ‘art’ app called Wombo Dream. It claimed that it could create new pieces of art (even their domain name has the .art extension) based upon keywords and a style selector. Let’s see what “Stonehenge” in a 1980s-esque “synthwave” style looks like:

Interesting and fun. I created a few images that I liked as abstracts and used them as my phone’s background and lock screen for a while. I expect that the usage curve falls off sharply after a few days. Still, it’s a slick implementation of some great algorithms in the name of fun.

DALL·E 2

Today I came across DALL·E 2 from OpenAI. Their opening video begins with the dialogue “Have you ever seen a polar bear playing bass? Or a robot painted like a Picasso? Didn’t think so.”

In their own words:

DALL·E 2 is a new AI system that can create realistic images and art from a description in natural language.

As well as synthesising new images based on natural language input, it can also be used to manipulate existing images. In the intro video, an example is shown of a dog sitting on an armchair. The dog is highlighted and replaced automagically with a “cute cat”. It can also take an input image and create new variations from different angles and in different styles. True AI magic.

The DALL·E 2 system uses deep learning, trained on a library of hundreds of millions of images, to understand the content of images and how those images relate to each other. What is refreshing about the intro video is that OpenAI also acknowledge some of the pitfalls of image training. The system will only be as good as the data underneath: how well (and how correctly) the source images are described, and the breadth of subjects covered. It won’t be able to synthesise an image of something that is unknown to it.

From the end of the video:

DALL·E is an example of how imaginative humans and clever systems can work together to make new things, amplifying our creative potential.

I’ve not yet been able to try it out myself (I’m on their waiting list), but it looks like it could be fun to play with. I’m not sure how it will amplify my creative potential (to paraphrase the video) unless this blog becomes an outlet for surrealist imagery, but the technology underneath has some profound uses beyond believable or downright odd images for meme creation.

Is it art?

Well, that depends upon you and your interpretation of art. Much of the lingo around these synthesised images uses the word ‘dream’ to suggest that the neural networks, machine learning and artificial intelligence are sentient in some way. They’re not, even if some images are a little nightmare-ish, suggesting too much cheese consumption before bed.

If you feed information into an AI system and it creates an image that you like, or you see an AI-generated image that you like, then maybe it is art. That’s up to you. Some professional artists may have a less liberal interpretation, especially when you consider that many of the training images used to create particular styles were created by professional artists. Spare a thought for them.

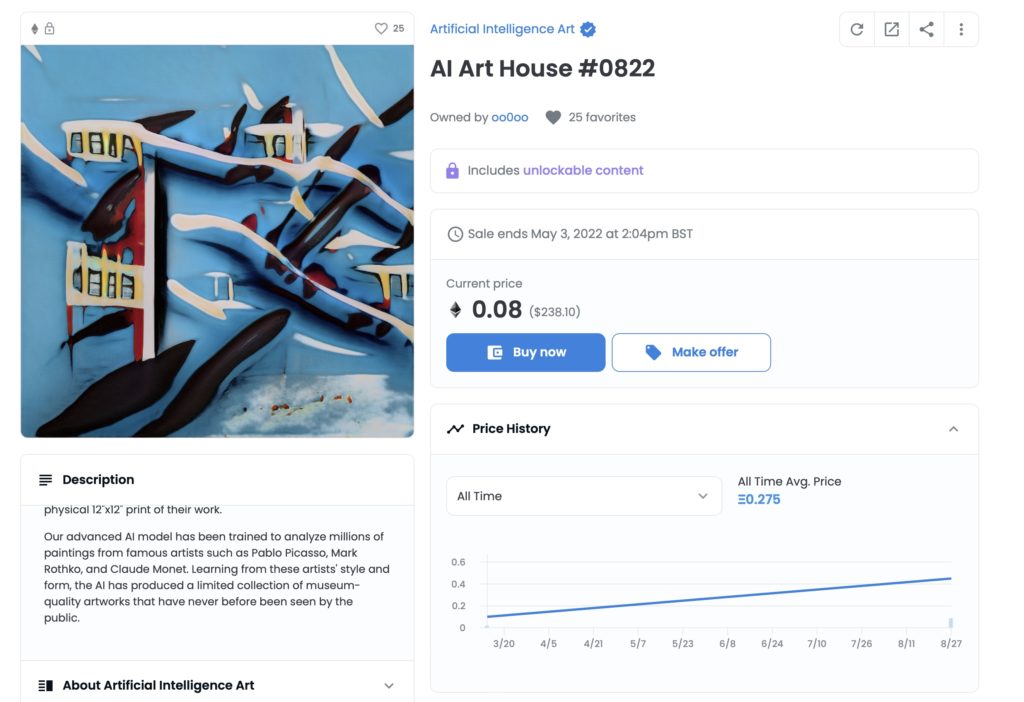

AI imagery is already for sale as NFTs and doubtless some people are earning good money (or crypto) from it.

What’s the point?

The exciting element of all of this, besides the creative potential, is the neural networks underneath. Their ability to recognise the scenes and objects contained in the photos in their training libraries will be invaluable in the future. Already, through the Google app or Apple’s Visual Lookup, I can use my phone’s camera to identify plants, pet breeds, you name it. It’s not always accurate, but that will get better.

Work has been underway for many years to train neural networks for archaeologists to help identify artefacts (such as classifying pot sherds), locate possible new sites via satellite imagery, help with petrological analyses, locate shipwrecks, read inscriptions and so on. I’m sure that every discipline will see computer vision approaches begin to help them in some way.

Of course, every technology can be used in ways that some disagree with too. Facial recognition without permission, the exploitation of workers in the data labelling industry – the list is probably very long.

With great power comes great responsibility.

AI and computer vision are the next great step in computing. Many of the phones in our pockets even have dedicated ‘neural engine’ processors that enable on-device machine learning.

Let’s see where this goes.